Do they want me to stop using computers altogether?

In the long run, we’re all dead. Meanwhile, can we still enjoy life and computers, or should we bend to the new religions, including the containerization of everything? Containers might look optimal, but they can sometimes be the wrong solution: just look at the container congestion in US ports (the containers all come from Asia).

In Why Linux on the Desktop is Irrelevant in the Long Run, I mentioned some aspects of this trend that bother me. The various virtualization, containerization, and sandboxing technologies are not new, and they’re not necessarily bad, but I strongly disagree with their use in personal computers. AppImages, Flatpaks and Snaps in particular remind me of the old “fix” to “DLL Hell” that meant each program was installed with its own set of DLLs, so that there were countless duplicates of the same libraries on a single PC. I don’t care that disk space is cheap; what I care about is that such solutions are not true solutions; they’re “brute-force” solutions and, to use something Greta Thunberg would say if she weren’t so dumb, they’re unecological. They’re the opposite of “less is more,” for their governing principle is “let’s use a bigger hammer” (sometimes masquerading as a set of smaller ones, nicely wrapped in environmentally friendly, plastic-free damping material, so they can’t hurt you).

While browsing Planet Fedora, I ran across this post by Máirín (sort of Maureen) Duffy: A new conceptual model for Fedora.

I first heard of Máirín back in 2006, when the artwork for Fedora Core 6 and 7 was being discussed. What surprises me is that, despite not being a software developer, she’s all into the new directions promoted by Red Hat, and she seems enthusiastic about them.

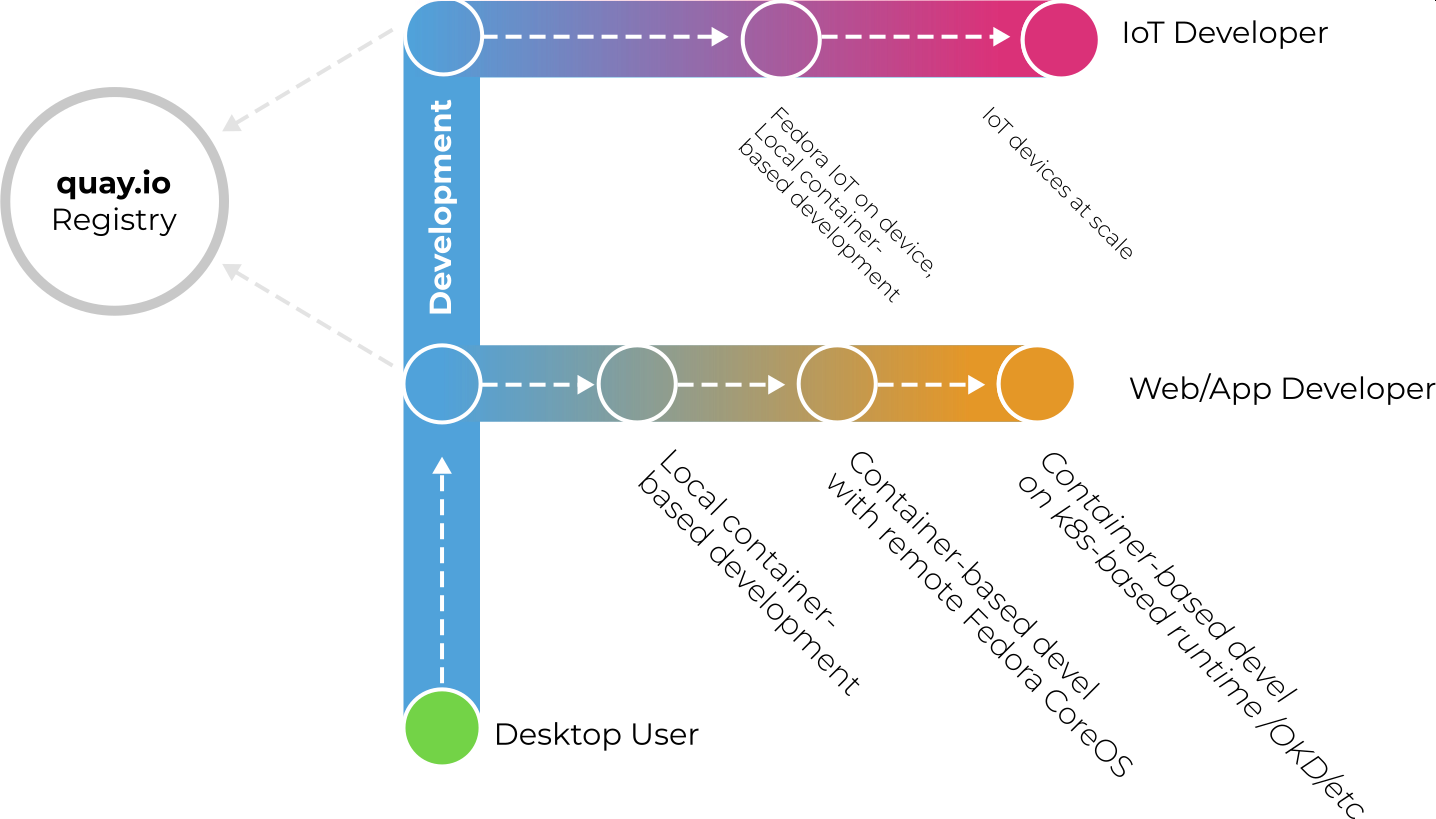

Maybe I tend to panic too easily, but here’s the picture for the Fedora “F” Model meant to improve the web presence and to clarify the message, as in “what is Fedora and what can I use it for?”:

And here’s the comment I wrote on her post (it was still awaiting moderation as I was writing this; UPDATE: my comment wasn’t approved, but deleted! There is no such thing as intellectual honesty.):

«They come to Fedora looking for a great Linux-based desktop operating system. Ideally, by default, this would be the container-based Silverblue version of Fedora’s Desktop.» — Well, if you want to scare away the users, do it, make Silverblue the default. If you want them to stop using Fedora, or to stop using Linux, please do.

People need a replacement for that bloated crap that is Win10/Win11, but most people do not need an immutable core OS, and they don’t need Flatpaks. They do not want Flatpaks!

Most people (myself included) want a traditional OS with traditional package management.

With all due respect, I have known who you are since 2006 (FC6), but precisely because I know who you are, I hope they won’t listen to you.

Your Fedora “F” model looks to me like the traditional Desktop user is “the retarded user who is so passé because they don’t want to go for the more modern technologies”! I understand what Silverblue is, and this is why I don’t want it for me. As a power user of everything I own, I want to do whatever I want with my OS, I want to be able to royally screw it. Heck, I am so much of a Luddite that I’d very much want to be able to run a Linux desktop like I was doing back in 1995-1996, i.e. with a 66 MHz CPU and 4 MB of RAM. I want minimalism, and Silverblue is anything but.

Let me give you an example from another distro, from what I encountered back in July:

– Sublime Text, package-based: 16.2 MB to download, 49.1 MB of disk space required

– Sublime Text, Flatpak-based: 1.0 GB to download, 3.3 GB of disk space required
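To put those two figures side by side, here is the arithmetic as a tiny Python sketch. It assumes decimal units (1 GB = 1000 MB); that convention is my assumption, since the installers don’t say which one they use.

```python
# Sizes reported for Sublime Text (figures from the list above).
# Assumption: decimal units, 1 GB = 1000 MB.
MB_PER_GB = 1000

package = {"download_mb": 16.2, "disk_mb": 49.1}
flatpak = {"download_mb": 1.0 * MB_PER_GB, "disk_mb": 3.3 * MB_PER_GB}


def ratio(key: str) -> float:
    """How many times larger the Flatpak figure is than the package figure."""
    return flatpak[key] / package[key]


for key in ("download_mb", "disk_mb"):
    print(f"{key}: {ratio(key):.0f}x larger as a Flatpak")
```

This prints 62x for the download and 67x for the disk space. With binary units (1 GB = 1024 MB) the ratios grow slightly; either way, the Flatpak costs roughly 60 to 70 times as much.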

Now, try to persuade me that I need Silverblue + Flatpaks. I’d rather use Windows 11, or kill myself, than resort to that kind of library duplication.

The Desktop User is not central to their model; it’s peripheral and prone to become irrelevant. Even if this won’t happen too soon, let me quote the brief description I gave of Fedora Silverblue:

a hybrid image/package system is still possible, but it is recommended to avoid package layering, except for a small number of apps that are currently difficult to install as Flatpaks. This might be the future, as it makes the base OS unbreakable, and it uses packages as an exception to Flatpaks, instead of using Snaps, Flatpaks and AppImages as an exception to packages.

Not in my house.

I never tried Fedora Kinoite, the “close sibling of Fedora Silverblue” (as someone said), because it doesn’t have a Live ISO (which is ridiculous for a 2.8 GB ISO!), and I couldn’t be bothered to install it. To me, both Silverblue and Kinoite are meant for the Metaverse that they, the technology companies, are building for us. They’re nightmarish for people who weren’t born yesterday, and I suggest you watch a couple of videos:

■ Timothée Ravier on Jun 29, 2021: Akademy 2021 – Kinoite: a new Fedora Variant with the KDE Plasma

■ Ermanno Ferrari on Apr 29, 2021: Fedora Silverblue: An Immutable OS

They made me wish I was still running Windows for Workgroups 3.11.

Speaking of the concept of immutability in an OS (e.g. Clear Linux, Fedora Silverblue and Kinoite), here’s a nice propaganda item: How macOS is more reliable, and doesn’t need reinstalling.

More reliable than what?! Oh, than itself in the past. From Catalina to Monterey, the changes made in macOS made it more suitable for a mobile device than for a fully-fledged computer; or, rather, they made it more suitable for people who don’t care how their “software-powered tin box” works. For dummies, yeah.

Such design features might sound great, but they’re restrictive, and against the KISS principle.

Copy-on-write in APFS? That’s advanced indeed, and also found in ZFS and Btrfs. But I hate copy-on-write, because it creates fragmentation. Oh, “Apart from speed, SSDs brought additional benefits, of increased reliability and their lack of ill-effects from fragmentation.” They don’t know what they’re talking about! Let me tell you what’s happening in real life with an SSD: with or without TRIM, an SSD’s controller will almost continually defragment the SSD when idle (what manufacturers call garbage collection), especially when the drive is at least, say, 80% full.
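To see why copy-on-write fragments files, here is a toy Python sketch. It is a hypothetical model, not how APFS, ZFS or Btrfs actually allocate space: a file starts as one contiguous run of blocks, random blocks of it are rewritten, and we count the extents (runs of physically contiguous blocks) left at the end. In place, each block is overwritten where it sits; under copy-on-write, each new version goes to a fresh block and the block map is updated. The function names and parameters are illustrative.

```python
import random


def count_extents(block_map: list[int]) -> int:
    """Count runs of physically contiguous blocks, in the file's logical order."""
    extents = 1
    for prev, cur in zip(block_map, block_map[1:]):
        if cur != prev + 1:
            extents += 1
    return extents


def simulate(copy_on_write: bool, file_blocks: int = 1000,
             rewrites: int = 200, seed: int = 42) -> int:
    """Rewrite random blocks of a contiguous file; return the final extent count.

    Toy model (assumption): the file starts at physical blocks 0..file_blocks-1,
    and free space is a single region right after it.
    """
    rng = random.Random(seed)
    block_map = list(range(file_blocks))  # logical block -> physical block
    next_free = file_blocks               # CoW allocates new blocks from here up
    for _ in range(rewrites):
        logical = rng.randrange(file_blocks)
        if copy_on_write:
            # CoW: the new version lands in a fresh block and the map is updated;
            # the old block is freed, leaving a hole in the file's layout.
            block_map[logical] = next_free
            next_free += 1
        # In place: the same physical block is overwritten; the map is unchanged.
    return count_extents(block_map)


print("in-place:", simulate(copy_on_write=False))
print("copy-on-write:", simulate(copy_on_write=True))
```

With these parameters, the in-place run keeps the file in a single extent, while the copy-on-write run leaves it split into hundreds of extents. Real file systems mitigate this (Btrfs has an `autodefrag` mount option, for instance), and on an SSD the logical layout says little about the physical one anyway.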

Try this experiment: delete a large file on an SSD. Were it on an HDD, you’d be able to recover it right away, e.g. using Recuva on Windows. Now, with an SSD, just sit for 30 seconds without doing anything (no downloads, no processes requiring disk I/O), and try to recover that file, whose space, in theory, shouldn’t have been overwritten by anything else. Chances are that the automatic defragmenting of some other large file has already made it unrecoverable, although you never issued a TRIM command yourself! Yeah, this is how a modern SSD works, and even more so with the extra fragmentation created by copy-on-write!

As for the immutability of the OS, with the Sealed System Volume (SSV), I expect this to land in Windows 12 someday. Keep in mind that APFS and SSV were designed for SSDs (APFS performs poorly on an HDD), which makes it obvious that they’re anything but KISS. Windows 10 was slow as molasses on an HDD from day one, so basically all these “modern” concepts require “advanced technology” just for your John Doe to be able to fart happily while using an OS much smarter than his mother.

LATE EDIT: Just like eMMC storage (embedded MultiMediaCard), USB flash drives and memory cards, SSDs have a problem that HDDs don’t have: data cannot be overwritten in place, as it can on a hard disk drive. A 4 KB unit can only be rewritten after the whole 256 KB unit containing it has been erased, so modifying or writing 4 KB can mean erasing and rewriting 256 KB! See Write amplification in Wikipedia. (They use the term “page” for 4 KB and “block” for 256 KB, while any logical creature would have used “block” for 4 KB and “page” for 256 KB. The software engineers are becoming increasingly stupid.)
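Wikipedia defines write amplification as the ratio between the data actually written to the flash and the data the host asked to write. Here is a minimal Python sketch of the worst case described above, assuming the 4 KB page and 256 KB block sizes from the text and a naive controller that erases and rewrites the whole block for every page update:

```python
PAGE_KB = 4      # smallest unit the flash can program (a "page")
BLOCK_KB = 256   # smallest unit the flash can erase (a "block")


def write_amplification(host_kb: float, flash_kb: float) -> float:
    """Write amplification = data written to flash / data written by the host."""
    return flash_kb / host_kb


# Worst case: a 4 KB update forces a read-erase-rewrite of the whole 256 KB
# block that contains it (no spare, already-erased pages to write into).
worst_case = write_amplification(host_kb=PAGE_KB, flash_kb=BLOCK_KB)
print(worst_case)  # 64.0
```

Real controllers avoid this worst case by writing updates out of place into already-erased pages and erasing blocks later, during garbage collection, so their factors are usually far lower. The cost comes back, though, as the drive fills up.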

The idea is that SSDs sabotage their own reliability through this write amplification. Now, think again about the effect copy-on-write has on this amplification. (Of course, it depends on how copy-on-write is implemented; what I know about Btrfs is that, despite its automatic defragmentation option, it performs poorly on drives that are almost full. So what’s the purpose of using an SSD if one cannot use it at full capacity, and if its wear leveling is sabotaged by explicit defragmentation in the file system, on top of the implicit defragmentation done by the SSD’s controller? Ever since LBA replaced CHS in HDDs, the storage structure as seen by the OS has had nothing to do with its actual structure, which is known only to the controller.)
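Why a nearly full drive suffers can be illustrated with a toy flash translation layer. The Python sketch below is a hypothetical model, not Btrfs and not any real SSD controller. It assumes page-level mapping, greedy garbage collection (the victim is the full block with the fewest live pages) and uniformly random single-page overwrites, and it reports write amplification for a given fill level. The function name `simulate_gc` and all its parameters are illustrative.

```python
import random


def simulate_gc(fill: float, blocks: int = 64, pages_per_block: int = 64,
                host_writes: int = 20_000, seed: int = 1) -> float:
    """Return the write amplification of a toy flash translation layer (FTL).

    Hypothetical model: page-level mapping, greedy garbage collection, and
    uniformly random 1-page overwrites. `fill` is the fraction of physical
    pages holding live data.
    """
    if not 0 < fill < (blocks - 2) / blocks:
        raise ValueError("fill must leave at least two blocks of spare space")
    rng = random.Random(seed)
    logical_pages = int(blocks * pages_per_block * fill)
    live = [set() for _ in range(blocks)]   # live logical pages in each block
    written = [0] * blocks                  # pages programmed since last erase
    location: dict[int, int] = {}           # logical page -> block with live copy
    free = list(range(blocks))              # erased blocks
    active = free.pop()                     # block currently being filled
    flash_writes = 0

    def program(lpn: int, during_gc: bool = False) -> None:
        nonlocal active, flash_writes
        if written[active] == pages_per_block:
            if not during_gc:
                while len(free) <= 1:       # keep one erased block for GC
                    collect()
            if written[active] == pages_per_block:  # GC may have opened one
                active = free.pop()
        old = location.get(lpn)
        if old is not None:
            live[old].discard(lpn)          # the old copy becomes garbage
        live[active].add(lpn)
        written[active] += 1
        location[lpn] = active
        flash_writes += 1

    def collect() -> None:
        full = [b for b in range(blocks)
                if b != active and written[b] == pages_per_block]
        victim = min(full, key=lambda b: len(live[b]))
        for lpn in list(live[victim]):      # copy live pages elsewhere first
            program(lpn, during_gc=True)
        written[victim] = 0                 # then erase the victim block
        free.append(victim)

    for lpn in range(logical_pages):        # precondition: write every page once
        program(lpn)
    flash_writes = 0                        # measure the steady state only
    for _ in range(host_writes):
        program(rng.randrange(logical_pages))
    return flash_writes / host_writes


for fill in (0.5, 0.8, 0.95):
    print(f"{fill:.0%} full: write amplification ~ {simulate_gc(fill):.1f}")
```

The fuller the drive, the more live pages each garbage-collected block still holds, so more of them must be copied before the block can be erased; the reported factor climbs steeply as the fill level approaches 95%.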