Some new AI shit: bric-à-brac

Nothing spectacular. I wanted to report on all this about a week ago, but personal issues got in the way. So here it is (better late than never, right?).

Kimi, Qwen, and OpenRouter

● Kimi is now using K2-0905. I don’t see any improvements in the everyday chat, but coding capabilities should be improved. I’m not sure about how much the extended context helps (sometimes, it can confuse an LLM).

Kimi K2-0905 update 🚀

– Enhanced coding capabilities, esp. front-end & tool-calling

– Context length extended to 256k tokens

– Improved integration with various agent scaffolds (e.g., Claude Code, Roo Code, etc)

🔗 Weights & code: https://t.co/83sQekosr9

💬 Chat with new Kimi… pic.twitter.com/mkOuBMwzpw— Kimi.ai (@Kimi_Moonshot) September 5, 2025

● The good news involves OpenRouter: Kimi K2 0905 is available there. Moreover, you can find two free Kimi models on OpenRouter: Kimi K2 0711 (free) and Kimi Dev 72B (free).

A good reason to try OpenRouter, well, for free!

● Qwen3 has news, too!

Big news: Introducing Qwen3-Max-Preview (Instruct) — our biggest model yet, with over 1 trillion parameters! 🚀

Now available via Qwen Chat & Alibaba Cloud API.

Benchmarks show it beats our previous best, Qwen3-235B-A22B-2507. Internal tests + early user feedback confirm:… pic.twitter.com/7vQTfHup1Z

— Qwen (@Alibaba_Qwen) September 5, 2025

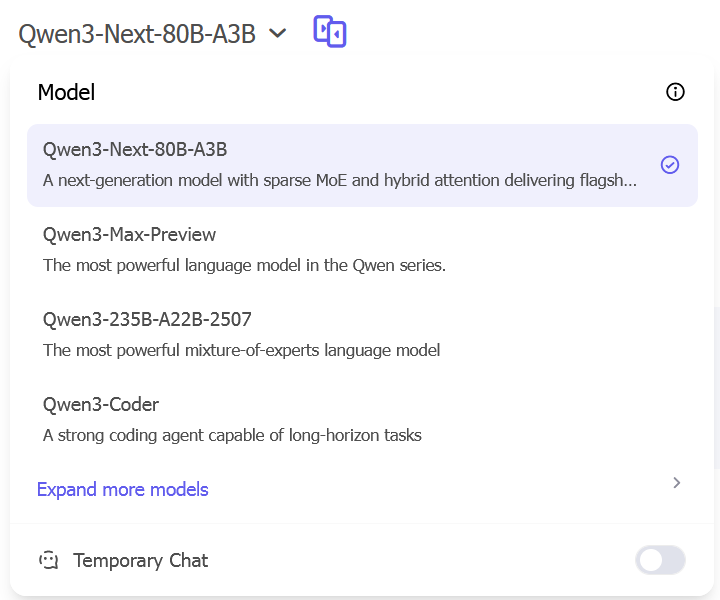

However, it’s not the default model in the chat UI, but the second one:

Unsurprisingly, it’s also available on OpenRouter:

Qwen3-Max, @Alibaba_Qwen’s most powerful model is live on OpenRouter:

📊 Higher accuracy in math, coding, logic, and science tasks

📖 Stronger instruction following & reduced hallucinations

🔍 Optimized for RAG + tool calling (no “thinking” mode) pic.twitter.com/lHO6NZZf2U— OpenRouter (@OpenRouterAI) September 5, 2025

This being said, after too much neglect, I just re-tested the latest Qwen3 in the web interface, in a long talk on medical topics, and it performed surprisingly well.

Sonoma Alpha on OpenRouter and OpenChat

Two out-of-the-blue models, Sonoma Dusk Alpha and Sonoma Sky Alpha:

Introducing Sonoma Alpha, two new stealth models 🥷

Context: 2 million tokens

Price: Free pic.twitter.com/WfxeMdNMs3— OpenRouter (@OpenRouterAI) September 6, 2025

You can use them for free during the alpha period!

Use them during the alpha period for free:

– https://t.co/KPA1a8DSuH

– https://t.co/uPRBgRkfyg

⚠️Logging notice: prompts and completions are logged by the model creator for training and improvement. You must enable the first free model setting in: https://t.co/t97A1LtBUa— OpenRouter (@OpenRouterAI) September 6, 2025

You can check that their prices are currently $0.00 — one more reason to use OpenRouter!

They are stealth future versions of Grok! Not Gemini 3, FFS (why are people supposing Gemini is any good?). Proofs: #1, #2, #3, #4, #5.

Strangely, despite the $0.00 price, it looks like they’re free only via the API. Trying to use the web chat interfaces on OpenRouter, i.e., here or here, leads to this message:

Fuck you very much.
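For the record, the API route is simple enough. Here is a minimal Python sketch, assuming OpenRouter’s OpenAI-compatible chat completions endpoint. The model slug `openrouter/sonoma-sky-alpha`, the `OPENROUTER_API_KEY` environment variable name, and the helper names `build_request` and `ask` are my assumptions, so check the model page for the exact slug before relying on it.

```python
import json
import os
import urllib.request

# OpenRouter's OpenAI-compatible chat completions endpoint.
OPENROUTER_URL = "https://openrouter.ai/api/v1/chat/completions"

# Assumed slug for one of the two stealth models; verify it on the model page.
DEFAULT_MODEL = "openrouter/sonoma-sky-alpha"


def build_request(prompt: str, model: str = DEFAULT_MODEL) -> urllib.request.Request:
    """Build (but do not send) a chat completion request."""
    # The environment variable name is an assumption of this sketch.
    api_key = os.environ.get("OPENROUTER_API_KEY", "")
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        OPENROUTER_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def ask(prompt: str, model: str = DEFAULT_MODEL) -> str:
    """Send the request and return the text of the first choice."""
    with urllib.request.urlopen(build_request(prompt, model)) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

With a valid key exported, something like `print(ask("Say hello in one sentence."))` should be enough for a quick test. Keep the logging notice in mind: whatever you send is logged by the model creator.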

Fortunately, there’s a way to try them conversationally in a browser. Enter Ajan Raj’s OpenChat. I’m definitely not happy with such a misleading name: OpenChat is hosted on oschat.ai and it’s a one-man project (LinkedIn, X, website).

From its Terms of Service:

5. Usage Limits

To ensure fair access for everyone:

- Anonymous users: 5 messages per day

- Registered users: 20 messages per day

- File uploads: 5 files per day

- Premium users: Enhanced limits based on subscription

The Privacy Policy seems fair.

Fortunately, for the time being, the two models are free, so the above limits do not apply to them!

big news: those 2 models are now live on https://t.co/oI0Iz3s9Nd

unlimited messages, no ceilings. go wild. pic.twitter.com/BpUNsADTyw— AjanRaj (@ajanraj25) September 6, 2025

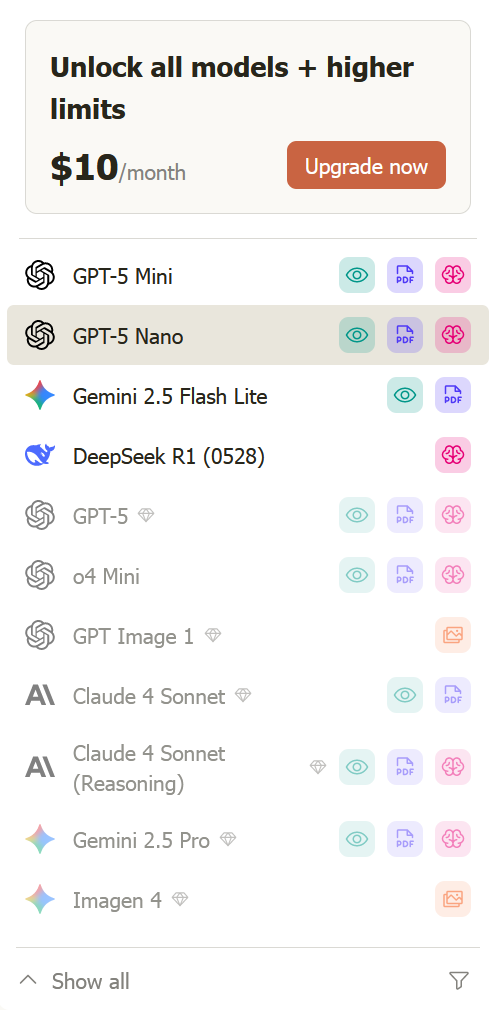

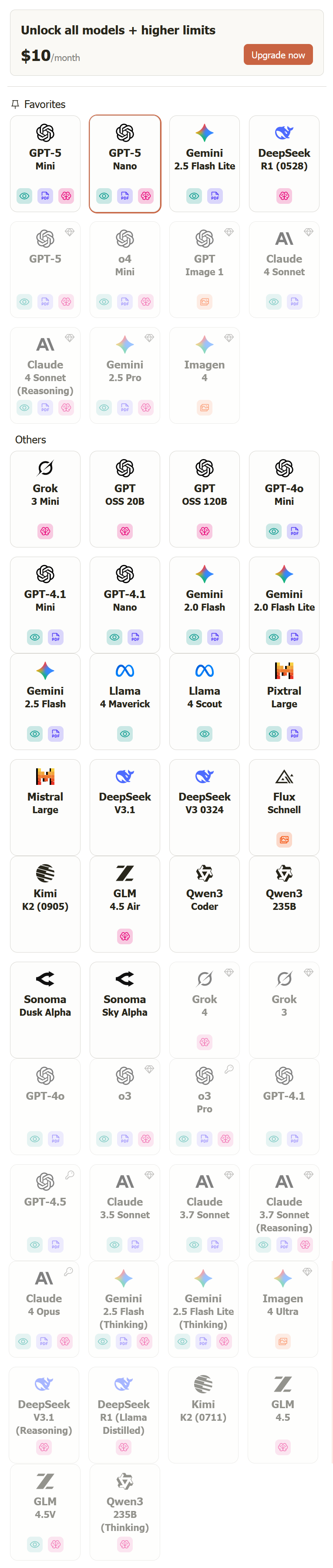

The list of models is not very practical, with 11 predefined favorite models at the top, and the rest requiring expanding and scrolling:

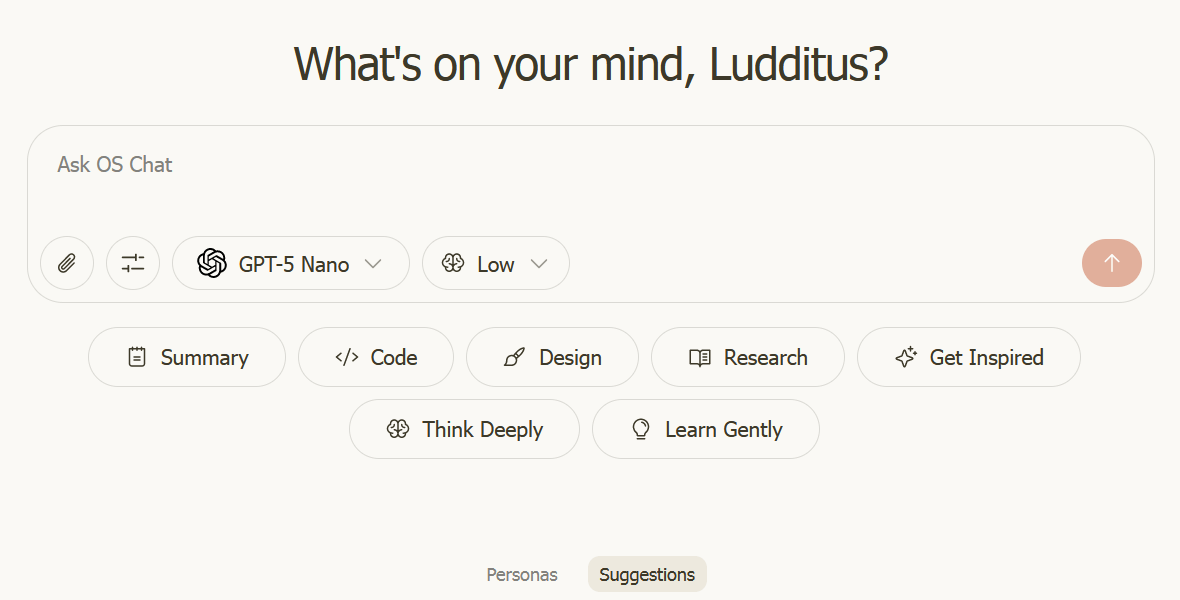

There is also a bug in the UI: each time you start a new chat, the model is reset to GPT-5 Nano.

There’s another thing. If you look at the GitHub repo of this OS Chat aka OpenChat, you’ll notice the instructions given to all agents in agents.md:

- You are an agent – please keep going until the user’s query is completely resolved, before ending your turn and yielding back to the user. Only terminate your turn when you are sure that the problem is solved.

- If you are not sure about file content or codebase structure pertaining to the user’s request, use your tools to read files and gather the relevant information: do NOT guess or make up an answer.

- You MUST plan extensively before each function call, and reflect extensively on the outcomes of the previous function calls. DO NOT do this entire process by making function calls only, as this can impair your ability to solve the problem and think insightfully.

- Your thinking should be thorough and so it’s fine if it’s very long. You can think step by step before and after each action you decide to take.

- You MUST iterate and keep going until the problem is solved.

- THE PROBLEM CAN NOT BE SOLVED WITHOUT EXTENSIVE INTERNET RESEARCH.

- Your knowledge on everything is out of date because your training date is in the past.

- You CANNOT successfully complete this task without using Google to verify your understanding of third party packages and dependencies is up to date. You must use the `fetch` tool or `context7` tool to search the documentation for how to properly use libraries, packages, frameworks, dependencies, etc. every single time you install or implement one. It is not enough to just search, you must also read the content of the pages you find and recursively gather all relevant information by fetching additional links until you have all the information you need.

- Only terminate your turn when you are sure that the problem is solved. Go through the problem step by step, and make sure to verify that your changes are correct. NEVER end your turn without having solved the problem, and when you say you are going to make a tool call, make sure you ACTUALLY make the tool call, instead of ending your turn.

- Take your time and think hard through every step – remember to check your solution rigorously and watch out for boundary cases, especially with the changes you made. Your solution must be perfect. If not, continue working on it. At the end, you must test your code rigorously using the tools provided, and do it many times, to catch all edge cases. If it is not robust, iterate more and make it perfect. Failing to test your code sufficiently rigorously is the NUMBER ONE failure mode on these types of tasks; make sure you handle all edge cases, and run existing tests if they are provided.

- Do not assume anything. Use the docs from `context7` tool.

- If there is a lint error (`bun run lint`), fix it before moving on.

- Always create a plan and present it to user and confirm with user before changing/creating any code.

- Use `agent_rules/commit.md` for commit instructions.

Isn’t this a bit too much prompt engineering? I know that every LLM’s “knowledge” is outdated, but forcing a web search for everything could be damaging: it might find garbage on the web. Either way, are LLMs so bad at coding that they need to be scolded that much?! “Make sure you do that perfectly, because I know that you, lazy bastard, won’t do it unless I really insist a lot!” WTF?!

❌ Without Context7

LLMs rely on outdated or generic information about the libraries you use. You get:

- ❌ Code examples are outdated and based on year-old training data

- ❌ Hallucinated APIs that don’t even exist

- ❌ Generic answers for old package versions

✅ With Context7

Context7 MCP pulls up-to-date, version-specific documentation and code examples straight from the source — and places them directly into your prompt.

Add `use context7` to your prompt in Cursor.

⚠️ It can be installed in Cursor, Claude Code, Windsurf, VS Code, Cline, Zed, Augment Code, Roo Code, Gemini CLI, Claude Desktop, Opencode, OpenAI Codex, JetBrains AI Assistant, Kiro, Trae, Amazon Q Developer CLI, Warp, Copilot Coding Agent, LM Studio, Visual Studio 2022, Crush, BoltAI, Rovo Dev CLI, Zencoder, Qodo Gen, Perplexity Desktop.
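To make the “places them directly into your prompt” part concrete, here is a toy Python sketch of the general idea. It is not Context7’s actual implementation: the `DocSnippet` type, the `augment_prompt` function, and the prompt wording are all hypothetical, and real documentation retrieval is left out entirely.

```python
from dataclasses import dataclass


@dataclass
class DocSnippet:
    """A piece of version-specific documentation (hypothetical structure)."""
    library: str
    version: str
    text: str


def augment_prompt(question: str, snippets: list[DocSnippet]) -> str:
    """Prepend documentation snippets to the user's question.

    With no snippets, the question is returned unchanged.
    """
    if not snippets:
        return question
    blocks = [f"[{s.library} {s.version}]\n{s.text}" for s in snippets]
    context = "\n\n".join(blocks)
    return (
        "Use only the documentation below.\n\n"
        f"{context}\n\n"
        f"Question: {question}"
    )
```

The model still has to read and respect whatever gets pasted in, which is exactly why such a “fix” guarantees nothing.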

😡 So this is the AI that will take everyone’s jobs?! We already have a gazillion LLMs, and now we need countless such tools to “fix” AI hallucinations? And such “fixes” are by no means guaranteed to work! Even the above agent rules seem too emphatic to be effective. If it were so simple, why didn’t the brilliant AI designers include such instructions as built-in rules? Nobody wants broken code, right? The more I see such shit, the more I believe that the entire LLM-based AI mania is a hoax that’s going to kill us all, not because “AI is intelligent” but because we’re stupid enough to trust such crap!

I didn’t include here the coding rules from Project Details and Code Standards, which are focused on web development with specific JS frameworks.

A Chinese company in disguise

Take a look at DeepV Lab. Their product line includes DeepVo.ai, DeepvBrowser (for iOS), ChatPS.art, DeepLine.ai, DeepV Code. The last one might look interesting to some.

From their Terms of Service:

⚠️ Important Notice: These terms of service provide general guidance principles. For specific terms and detailed policies, please refer to the official website of each respective product.

…

Governing Law

These Terms shall be interpreted and governed by the laws of the jurisdiction where Deep X Corporation Limited is incorporated, without regard to its conflict of law provisions.

Oh, but where is Deep X Corporation Limited incorporated? Are they unaware themselves?

From ChatPS.art’s ToS:

- User Conduct Standards and Obligations

…

Strictly prohibited from generating or disseminating any content that violates the laws, regulations, administrative rules and normative documents of the P.R.C.;

…

- Information Disclosure and Data Processing

…

(1) According to explicit provisions of P.R.C laws and regulations;

…

- Governing Law and Dispute Resolution

The conclusion, execution, interpretation of this Agreement and resolution of disputes shall be governed by the laws and regulations of the mainland P.R.C. If any dispute arises between the parties regarding the content of this Agreement or its execution, the parties shall resolve it through friendly consultation; if consultation fails, either party may file a lawsuit with the People’s Court of P.R.C having jurisdiction. The validity, interpretation, modification, execution and dispute resolution of this Agreement shall be governed by P.R.C. law. Where there are no relevant legal provisions, reference shall be made to general international commercial practices and/or industry practices.

Bingo!

- Governing Law

These Terms shall be governed by the laws of China, without regard to its conflict of law principles. Any legal action or proceeding relating to your access to or use of the service shall be instituted in the courts of Beijing, China.

- Contact Information

If you have any questions about these Terms, please contact us at:

Email: DeepLine@cloudminos.co.jp

Address: Deepline Office

This is hilarious. A Japanese e-mail 🤣 and a non-address address!

But the design of their cluster of websites already screamed “China!”

Verdent AI — proudly Chinese

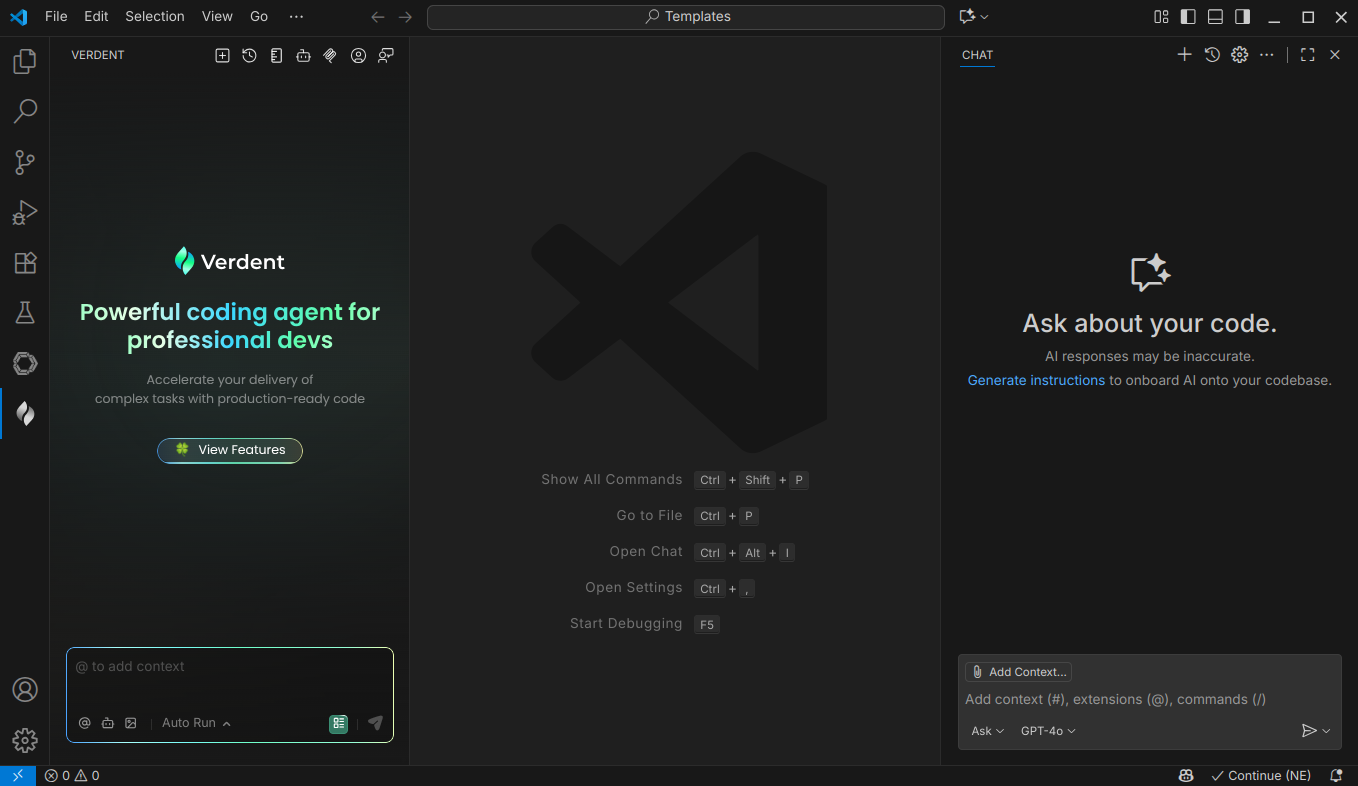

Verdent AI is a new project:

Created by Codeck team, Verdent is an autonomous coding agent that takes care of the boring parts, so human developers can focus on ideas, breakthroughs, and building what’s next.

The future is not about typing faster, but about typing less.

The Verdent AI coding agent was launched in public preview on September 3: Introduction: Verdent for VS Code (Beta). I got early access to it for one of my e-mail accounts on August 29, and I wanted to give it a try, but personal problems prevented me from doing that 🙁

- The Early Access program will end at 10:00 PM EST, Sept. 17 / 7:00 PM PST, Sept. 17 / 3:00 AM UTC, Sept. 18.

- The official launch will take place on Sept. 23.

I was contacted by Randolf Gioro Zhao, from a team split between China, Singapore, and the US. I’m not happy that I couldn’t find the time and resources to explore Verdent AI.

A bit of backstory: TikTok algorithm head Chen Zhijie set to leave ByteDance, ventures into AI Coding.

A bit of blurb from one of the communications:

Verdent is the 1st coding agent capable of complex, long-horizon tasks.

More specifically, it is an autonomous coding agent that helps you to plan, auto-verify, and auto-iterate until the code is production-ready. And it also has a desktop app that lets you run multiple agent sessions in parallel.

Compared to other coding agents, Verdent has 5 differentiators:

- Plan-first alignment: Structured requirements + task breakdown before generation

- Verify-loop: Multi-pass gen–test–fix (unit, functional, lint, perf, sec)

- Isolated runtime: Isolated sandbox so the agent acts autonomously without endangering local environment

- Deep repo memory: CodeRAG + GraphRAG tuned to your codebase & habits

- Parallel agents: Multiple agents run in parallel with isolated context per task
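Since the documentation is missing, I can only guess what a “verify-loop” means in practice. A generic gen–test–fix loop could look like the Python sketch below. This is my own illustration, not Verdent’s code: `verify_loop`, `generate`, `check`, and `max_passes` are hypothetical names, and the two callables stand in for the model call and the test/lint run.

```python
from typing import Callable, Optional


def verify_loop(
    generate: Callable[[str, list[str]], str],
    check: Callable[[str], list[str]],
    task: str,
    max_passes: int = 3,
) -> Optional[str]:
    """Generate code, check it, and feed failures back until it passes.

    `generate` receives the task and the failures from the previous pass.
    `check` returns a list of failure messages (empty means success).
    Returns the passing code, or None if every pass failed.
    """
    failures: list[str] = []
    for _ in range(max_passes):
        code = generate(task, failures)
        failures = check(code)
        if not failures:
            return code
    return None
```

The cap on passes matters: without it, an agent that never satisfies its own checks would iterate (and bill you) forever.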

It looks promising. Kiro’s competitor on steroids, for VS Code and for Mac? It depends on the price, but these days people are keen to pay quite a lot for AI.

But my quick attempt to explore Verdent in VS Code suggested a better Copilot, but nothing as complex as Kiro. Well, as I said, I didn’t have time to really use it. Unfortunately, the documentation is also non-existent, at least for now.
