Why Linux on the Desktop is Irrelevant in the Long Run

Ten to fifteen years ago, there were many more Linux distros, and a lot of enthusiasm around Linux: vibrant communities, positive vibes, unlike today. Most people were still dual-booting with Windows, usually for games (Steam for Linux was first released in 2013). Today, many are using Linux to revive old laptops, and there are some lunatics who want a Windows-free new laptop or desktop. This has no future, and there are several reasons for that.

First concept to die: KISS

KISS is not what you get by using a tiling window manager in Linux. What you get in such a case is minimalism and, in some cases, increased productivity. KISS is also not obtained by resisting systemd; such a tenacious protest is more like a religious cult.

The concept of a Linux distro for our desktops is an antiquated one. What has been happening for some time now is the containerization of everything, and with it the gradual slide of Linux on the Desktop into irrelevance.

It all started innocuously. People were using virtual machines to run Windows within Linux, or Linux within Windows. There’s nothing wrong with virtual machines in a professional environment, but it’s anything but zen on a personal system. But hey, more and more people use SUVs even to move their ass to the supermarket, and even a modest car such as the 2021 Ford Kuga is close to a T-34 in size and weight, so who would understand that a Fiat 500 is a valid and elegant choice for all purposes except a family car? (Electric SUVs weigh even more, to kill as many as possible of “the others” in a crash with another vehicle.)

Originally, it was pure madness: without proper virtualization support in the CPU, pure software emulators such as Basilisk II and Bochs were incredibly slow, but I used them both some 20 years ago. DOSBox isn’t a problem, as it’s specifically used to run older DOS games (abandonware) on modern hardware, and the slowness is even an intended feature.

But the true hypervisors have changed the game:

- The hosted (Type-2) ones that can run any major OS while in a full graphical environment of another major OS are used by everyone, especially VirtualBox and VMware Workstation Player. (I’m not sure why Microsoft killed Virtual PC, or why Parallels killed its Workstation for Windows and Linux.)

- The bare-metal (Type-1) ones are not meant for the end user; they include Hyper-V and solutions based on KVM and Xen.

- QEMU has several operating modes, from two emulation modes that are purely hosted, to KVM and Xen hosting, which are hybrid modes.
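KVM, and therefore QEMU's KVM mode, only works when the CPU offers hardware virtualization extensions (Intel VT-x or AMD-V); without them you are back to slow pure emulation. On x86 Linux these extensions show up as the `vmx` or `svm` flag in `/proc/cpuinfo`. A minimal sketch of such a check, assuming an x86 machine (ARM kernels report CPU features differently); the function and constant names are only illustrative:

```python
from pathlib import Path

# x86 CPU flags that advertise hardware virtualization support.
VIRT_FLAGS = {"vmx": "Intel VT-x", "svm": "AMD-V"}


def virtualization_extensions(cpuinfo_text: str) -> set[str]:
    """Return the virtualization flags found in /proc/cpuinfo-style text."""
    found = set()
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        # Only the "flags" lines list CPU features on x86.
        if sep and key.strip() == "flags":
            found.update(flag for flag in value.split() if flag in VIRT_FLAGS)
    return found


if __name__ == "__main__":
    cpuinfo = Path("/proc/cpuinfo")
    if cpuinfo.exists():
        flags = virtualization_extensions(cpuinfo.read_text())
        if flags:
            names = ", ".join(VIRT_FLAGS[f] for f in sorted(flags))
            print("hardware virtualization:", names)
        else:
            print("no vmx/svm flag found")
    else:
        print("/proc/cpuinfo not found (not Linux?)")
```

The shell one-liner `grep -E 'vmx|svm' /proc/cpuinfo` does the same job; the function form just makes the parsing explicit.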

Then you have ways to manage such virtual machines, such as GNOME Boxes, libvirt, virt-manager, or Vagrant. The latter also supports Docker containers (see below).

Even with just that, where’s the KISS principle? For an end user’s personal desktop, the KISS principle means that the user should install and use one instance of one OS, and nothing more, on a single machine!

Acceptable detours from KISS are compatibility layers, such as WINE for Linux (to run Windows binaries), FreeBSD/NetBSD compatibility layers (to run Linux binaries), and… WSL (Windows Subsystem for Linux)!

Actually no, WSL is a vile ruse. Microsoft uses WSL as an embrace, extend and exterminate tactic against Linux, the idea being, “why would you install a Linux distro when you can have it here in Windows 10?”

But there’s more to it

Containers. Orchestration. Big words and big bucks.

Just as software development is not about the algorithms and the useless academic Python programs your kid learns in school, the main purpose of an operating system today is not to be a Linux desktop, a Windows 10 Home machine, or a macOS waste of money. OK, networking is still networking, plus Cloud, plus security. If you’re pushing your children into a career in IT, you’re killing their brains and their common sense. Let your kid digest this kind of language before choosing a career:

Docker Datacenter brings container orchestration, management and security to the enterprise. Docker delivers an enterprise-grade IT Ops managed and secured environment and empowers developers to build and ship applications in a self service manner.

The Docker Datacenter technology provides an out of the box CaaS environment with integrated workflows to build, manage and deploy applications with open standards and interfaces to allow for easily customizing to your business and your application. With an integrated technology platform that spans across the application lifecycle with tooling and support for both developers and IT operations, Docker Datacenter delivers a secure software supply chain at enterprise scale.

And this is actually one of the most legible and intelligible descriptions of a modern software technology! Most techno-blabber you’ll find on a product’s website is the crappiest possible crap!

Here too, it all started at a harmless level. FreeBSD jails, Linux chroot jails, elementary things even I was able to use.

Then came OS-level Linux virtualization solutions such as LXC (Linux Containers) and OpenVZ (Open Virtuozzo), the latter being more like a virtual server.

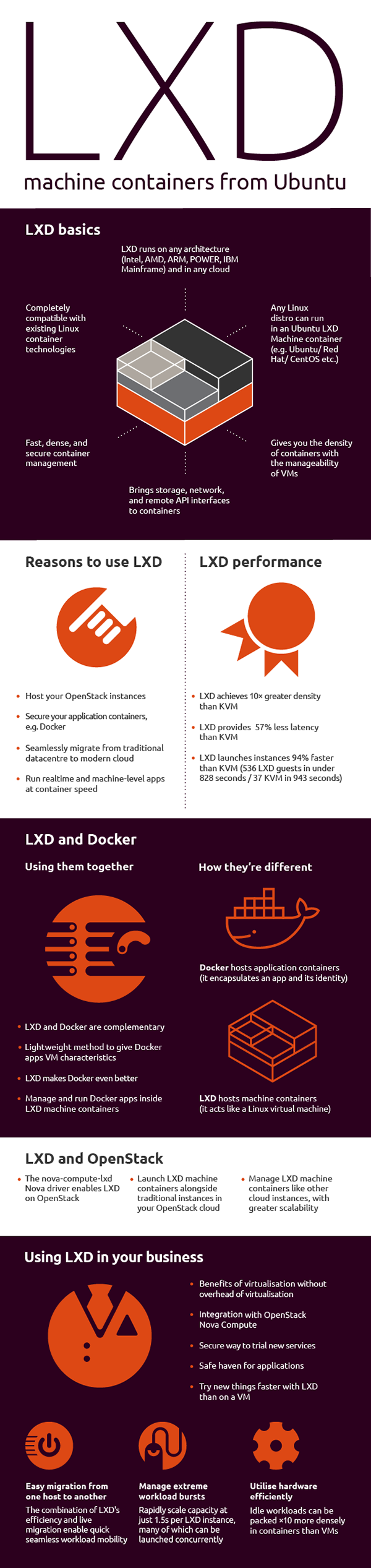

Now everyone is into Docker (app containers), orchestrated through Kubernetes, OpenShift, Portainer or the likes (because Kubernetes has stopped supporting Docker directly). For LXC, there’s also LXD (Linux Container Hypervisor) from Ubuntu:

While containers are a good invention, I believe they’re already overused. To some minds, they might seem to conform to the KISS principle, but to me they are complexity for the sake of modernism, even when built from LEGO-like software components. Linux package maintainers build packages in containers, not in chroot jails: we’re not in the last century anymore. People use containers for many other tasks, too.

For the non-professional, containerization even has a poor relative called sandboxing: Windows 10 now has a built-in sandbox, but the standard solution used to be running a web browser in Sandboxie, to contain the possible effects of a zero-day security vulnerability. (On Linux, sandboxing solutions include AppArmor and Firejail.) And this is definitely anti-KISS! It’s bad enough that the heaviest application on a modern home computer is the web browser, mainly because web pages aren’t HTML + images + CSS anymore, but cesspools with shitloads of JS that require huge amounts of CPU and RAM for nothing. Now people should make things even more convoluted through sandboxing, virtualization and whatnot?

Yes, this is the future.

Consider package management

The concept of packages doesn’t only apply to Linux and *BSD, and it’s not only used for system components. Most software developers use package managers even under Windows: NuGet, Chocolatey, Scoop, winget. Is this progress, or yet another kind of containerization? (The Luddite that I am can only give an easy-to-guess answer to that.)

Even the way DLL Hell was avoided was a sort of containerization: each application got its own set of DLLs, so several copies of the same DLLs ended up on disk and in RAM. Later, portable apps became more and more popular, and what is this if not a refusal to use the normal OS architecture?

In Linux, this has led to technologies that want to avoid the risks of circular or broken dependencies. Who cares about APT, Yum, DNF, Zypper, Pacman, pkg, or even eopkg and XBPS? Who cares about ports, Portage, or AUR? Things can be different here too.
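What “circular or broken dependencies” mean for a package manager can be shown with a topological sort: every package must be installed after what it depends on, which is impossible when the dependency graph contains a cycle or points at a package that doesn’t exist. Below is a minimal sketch using Kahn’s algorithm; real tools such as APT or DNF use far more elaborate solvers (versions, conflicts, alternatives), and the package names are made up.

```python
from collections import deque


def install_order(deps: dict[str, list[str]]) -> list[str]:
    """Order packages so that every dependency is installed before its dependents.

    Raises ValueError for a broken dependency (a required package that does
    not exist) or for a circular dependency (no valid order exists).
    """
    for pkg, reqs in deps.items():
        for req in reqs:
            if req not in deps:
                raise ValueError(f"broken dependency: {pkg} requires missing {req}")

    # Kahn's algorithm: count each package's unsatisfied dependencies and
    # remember which packages depend on it.
    unmet = {pkg: len(set(reqs)) for pkg, reqs in deps.items()}
    dependents: dict[str, list[str]] = {pkg: [] for pkg in deps}
    for pkg, reqs in deps.items():
        for req in set(reqs):
            dependents[req].append(pkg)

    ready = deque(sorted(pkg for pkg, n in unmet.items() if n == 0))
    order: list[str] = []
    while ready:
        pkg = ready.popleft()
        order.append(pkg)
        for dependent in dependents[pkg]:
            unmet[dependent] -= 1
            if unmet[dependent] == 0:
                ready.append(dependent)

    if len(order) != len(deps):
        # Whatever is left waits on a cycle, directly or through another package.
        stuck = sorted(pkg for pkg, n in unmet.items() if n > 0)
        raise ValueError(f"circular dependency involving: {', '.join(stuck)}")
    return order


if __name__ == "__main__":
    print(install_order({"app": ["libfoo"], "libfoo": ["libc"], "libc": []}))
    # ['libc', 'libfoo', 'app']
```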

In the old-school Linux distros, which are still the majority, there’s been an infestation of Snaps, Flatpaks, and AppImages. AppImages have been around longer than Flatpaks and Snaps, and they’re more universal, yet almost nobody uses them nowadays. Maybe that’s because Canonical is behind Snaps and Red Hat is behind Flatpaks (“The Future of Apps on Linux!”). They’re all crap, usually run sandboxed (Flatpaks are the most restrictive), and they turn a typical Linux system into a hodgepodge. Furthermore, AppImages and Snaps remain compressed at all times, so such an app is slow to start. Snaps also cannot always follow the GTK/Qt theming of the host system, yet they’re forcibly pushed into Ubuntu (even Ubuntu MATE has several preinstalled Snaps, including the default theme and the Welcome app!). Even LXD must be installed as a snap! The solution must be elsewhere.

The naive approach probably started with distros like GoboLinux, in which each program has its own directory tree (portable app, much?). Screw the FHS! Now you also have NixOS and Guix System, in which packages are isolated from each other. Some advantages of this approach, beyond the techno-blabber (transactional upgrades and rollbacks, declarative system configuration, etc.): no package can break another package and, especially in Guix, package management is unprivileged. Why should you impersonate root through sudo to install a package? To me, this looks like something many users would see as an annoyance! (PackageKit can be used to avoid asking for credentials, but not all distros make proper use of it.) Another similar approach is JuNest (Jailed User NEST), based on Arch Linux.
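The isolation in NixOS and Guix comes from installing each package into its own store directory whose name includes a hash of everything that went into building it, so two builds against different library versions land in different directories and cannot overwrite each other. The toy function below only illustrates that idea; it is not the real Nix or Guix hashing scheme, and the `/toy/store` prefix and package names are hypothetical.

```python
import hashlib
import json

STORE = "/toy/store"  # hypothetical prefix; Nix uses /nix/store, Guix /gnu/store


def store_path(name: str, version: str, inputs: dict[str, str]) -> str:
    """Toy input-addressed path: hash the package recipe together with the
    store paths of its dependencies, and put that hash in the directory name."""
    recipe = json.dumps(
        {"name": name, "version": version, "inputs": inputs}, sort_keys=True
    )
    digest = hashlib.sha256(recipe.encode("utf-8")).hexdigest()[:32]
    return f"{STORE}/{digest}-{name}-{version}"


if __name__ == "__main__":
    ssl_old = store_path("libssl", "1.1", {})
    ssl_new = store_path("libssl", "3.0", {})
    # The same app built against two library versions gets two distinct paths,
    # so both builds can be installed side by side.
    print(store_path("app", "1.0", {"libssl": ssl_old}))
    print(store_path("app", "1.0", {"libssl": ssl_new}))
```

Because the path is derived from the inputs, the same recipe always maps to the same directory, which is also what makes rollbacks cheap: the old directory is still there.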

A peculiar approach is Homebrew (which now integrates Linuxbrew). Don’t get me started on it. It’s blasphemy! And there’s even worse madness: Whalebrew, i.e. Homebrew, but with Docker images. Whalebrew creates aliases for Docker images, so you can run them as if they were native commands. It’s like Homebrew, but everything you install is packaged up in a Docker image! Can you imagine something with more overhead than that? Oh, yes: those tiny butter containers (sic!) of 10 grams each, with 6 grams of plastic per 10 grams of butter!
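To see where the overhead comes from, here is what “running a Docker image as if it were a native command” boils down to: a tiny wrapper script that launches a fresh container on every call. This is a hypothetical sketch, not Whalebrew’s actual implementation; the function name and the `example/wget` image are made up, while `--rm`, `-i`, `-v` and `-w` are standard `docker run` options.

```python
import shlex


def docker_wrapper_script(image: str) -> str:
    """Return a POSIX shell script that runs a Docker image like a local command.

    Hypothetical sketch: the current directory is bind-mounted into the
    container so the tool can see your files, and the container is deleted
    (--rm) when the command exits.
    """
    return (
        "#!/bin/sh\n"
        "# Each invocation starts a brand-new container from the image.\n"
        'exec docker run --rm -i -v "$PWD":/workdir -w /workdir '
        f'{shlex.quote(image)} "$@"\n'
    )


if __name__ == "__main__":
    print(docker_wrapper_script("example/wget"))
```

Saved as an executable file somewhere on `PATH`, such a script makes `wget` look local, but every single invocation goes through the Docker daemon and spins up a container.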

But these are community efforts, not corporate ones. What about these?

- Clear Linux, owned by Intel. The OS upgrades itself as a whole, because the apps are not part of it. Its major problem is that precompiled apps come in bundles, not as individual apps. Insufficient granularity, if any.

- Fedora Silverblue, sponsored by Red Hat. The OS itself is immutable and identical among different machines. Rollbacks of the OS are easy. Apps are installed as Flatpaks. Developers can also install CLI tools, and a hybrid image/package system is still possible, but it is recommended to avoid package layering, except for a small number of apps that are currently difficult to install as Flatpaks. This might be the future, as it makes the base OS unbreakable, and it uses packages as an exception to Flatpaks, instead of using Snaps, Flatpaks and AppImages as an exception to packages. However, I’m not ready for it, as this containerization is contrary to my KISS principles.

- After Sabayon decided to stop being a buggy Gentoo derivative and to join Funtoo, the result was rebranded as MocaccinoOS. It comes in a Micro flavor, based on Linux From Scratch and suited for cloud and containers, and a Desktop flavor meant to replace Sabayon, but layered: e.g. KDE is a “layer” called “plasma” which installs as a whole.

- Another attempt at an immutable OS base, with a focus on portable AppImages but also support for Flatpaks, Snaps and native packages (rlxpkg): rlxos.

LATE EDIT: Some people seem to be extremely happy with Fedora Silverblue: “Switched to Silverblue, can’t look back 🙂”. I have no intention of switching to such a distro philosophy, but “The pieces of Fedora Silverblue” is a nice attempt to make people swallow the pill: “Fedora Workstation users may find the idea of an immutable OS to be the most brain-melting part of Silverblue.” And the first comment reads, “Silverblue could actually be the future of Linux desktop.” Could, might, should, would… I might be too old for this… thing.

And there’s even more to it

Why do you think Red Hat and SUSE didn’t try hard enough to impose RHEL or SLED on everyone’s desktop? Why don’t they care much that Fedora and openSUSE are only used by a bunch of enthusiasts and by IT professionals learning specific technologies? Why doesn’t Canonical promote Ubuntu like it did back in 2004? Why don’t we have phones running Linux? (No, not PinePhones that ship directly from China to the end user!)

The messages are clear. 2017: Growing Ubuntu for cloud and IoT, rather than phone and convergence:

The choice, ultimately, is to invest in the areas which are contributing to the growth of the company. Those are Ubuntu itself, for desktops, servers and VMs, our cloud infrastructure products (OpenStack and Kubernetes) our cloud operations capabilities (MAAS, LXD, Juju, BootStack), and our IoT story in snaps and Ubuntu Core.

2019: Open infrastructure, developer desktop and IoT are the focus for Ubuntu 19.04:

Ubuntu 19.04 integrates recent innovations from key open infrastructure projects – like OpenStack, Kubernetes, and Ceph – with advanced life-cycle management for multi-cloud and on-prem operations – from bare metal, VMware and OpenStack to every major public cloud.

…

Control decisions move to the edge with smart appliances based on Ubuntu, enabling edge-centric business models. Amazon published Greengrass for IoT on Ubuntu, as well as launching the AWS DeepRacer developer-centric model for autonomous ground vehicle community development, also running Ubuntu. The Edge X stack and a wide range of industrial control capabilities are now available for integration on Ubuntu based devices, with long term security updates. Multiple smart display solutions are also available as off-the-shelf components in the snap store.

Nobody cares what you’re running on the desktop! They’re perfectly fine with leaving Microsoft the automatic sale of an OEM Windows 10 license with every new computer! What they care about is servers, SaaS and PaaS! It’s all part of the Internet of Things, of Edge Computing, of the madness of the 21st century. Can we still grow potatoes without software in the Cloud?

Relevant keywords:

- Ubuntu Server.

- openSUSE MicroOS, openSUSE Kubic, SUSE JeOS.

- Fedora CoreOS, Red Hat OpenShift, Red Hat OpenStack Platform.

Containerized Linux is not only the future, it’s already the present! The old server-desktop dichotomy is obsolete.

We’re even going back to the mainframe computing metaphor, as in my opinion, the Cloudification of everything has two contradictory faces:

- Edge Computing brings more computing power to IoT nodes, thus being like a transition from the concept of “powerful mainframe, dumb terminal” to “powerful server, reasonably powerful PC”;

- SaaS, PaaS, and everything running in the Cloud goes back from “powerful PC” and “powerful server, reasonably powerful PC” to “powerful mainframe, dumb terminal”!

Actually, why would I need a fully-fledged OS when all I need is a browser, because everything runs in the Cloud? All the other remote desktop solutions aren’t much different: “the real thing” doesn’t run locally!

To me, this nullifies the previous 40 years, and the age of the Personal Computer. If nothing works when the Internet is down, you have absolutely no power whatsoever. An old MS-DOS computer was self-reliant, and a Cloud solution only has one clear attribute: it’s fragile.

Desktop-in-the-cloud solutions seem to be a new fad. While Gaël Duval’s Ulteo Online Desktop was a fiasco, Shells now sells “Desktop as a Service” plans, with a special offer for running Manjaro in the cloud!

I know they’re serious, as they’re recommended by two rappers! Take a look at their Instagram profiles here and here. La crème de la crème. Not.

But why would I want to “Run Manjaro in the cloud with any device with a browser or our desktop and mobile apps”? Ain’t this something like in the 1970s, when a dumb VT52 terminal was connected to a mainframe server?!

And how about the graphics? In my time, and I quote myself from here, video cards were simply double-buffering DPRAM interfaces to the video display, and all the calculations were done in the CPU. But today’s graphics cards have tremendous computing power, and the video output isn’t easy on the bandwidth if the moron using a graphics card that’s smarter than him wants to play at 144 Hz on a 4K display! And yet, there’s GeForce NOW, a cloud gaming service whereby you play games hosted on remote servers and streamed over the Internet to one of the supported devices.
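For a sense of scale, here is the raw pixel rate a 4K display at 144 Hz demands, assuming 24 bits per pixel (8 bits per RGB channel) and ignoring blanking intervals and compression, compared with plain 1080p at 60 Hz. A cloud gaming stream is compressed very heavily, so what actually travels over the Internet is far smaller; this only shows how much data the GPU pushes to the screen.

```python
def raw_video_bitrate(width: int, height: int, bits_per_pixel: int, fps: int) -> int:
    """Uncompressed pixel data rate in bit/s (no blanking intervals, no compression)."""
    return width * height * bits_per_pixel * fps


if __name__ == "__main__":
    uhd_144 = raw_video_bitrate(3840, 2160, 24, 144)  # 4K at 144 Hz
    fhd_60 = raw_video_bitrate(1920, 1080, 24, 60)    # 1080p at 60 Hz, for comparison
    print(f"4K @ 144 Hz: {uhd_144 / 1e9:.1f} Gbit/s")  # 28.7 Gbit/s
    print(f"1080p @ 60 Hz: {fhd_60 / 1e9:.1f} Gbit/s")  # 3.0 Gbit/s
    print(f"ratio: {uhd_144 / fhd_60:.1f}x")  # 9.6x
```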

It wasn’t enough that the entire Internet consumes 20% of the electricity produced on the planet. It’s not enough that the Bitcoin network uses as much power as the Netherlands just to exist. Now even a retarded teenager cannot play a game on his bloody computer, but rather he wants to have gazillions of terabytes transferred over the Internet for his whim!

And we, those who are a bit of Luddites, we who prefer to play music and films from local files instead of streaming them repeatedly over the Internet, we who want efficiency and KISS, we’re told to reduce our CO2 footprint?!

Closing lines

Whoever wants, like I do, to install a “classic” Linux desktop, using “classic” package management, and to have it configured light enough to run even on low-end computers regardless of how powerful the actual hardware is, must be from the old school of dreamers. It’s no wonder that more and more of the people who show their Linux desktops on YouTube are 50+ or 60+. There are still those who show their “rice” on Reddit, but they’re modern hippies. Yuppies use MacBooks, and they don’t even care about the software they use.

But I do. Too much for this age when everything that made sense is forbidden or moribund.

And yet… not all hope is lost! Raspberry Pi is powerful enough to run a fully-fledged Linux desktop, and it’s not going to change to a different OS model very soon. Raspberry Pi OS is Debian, but one can also run e.g. Manjaro, one of the many other Linux distros that support arm64, or even very specific distros like RetroPie.

Also, for a distro sponsored by a company that doesn’t care much about the end user, Fedora gives mixed messages, some of which suggest they still do care. Its home page prominently lists the “official editions” Fedora Workstation, Fedora Server, and Fedora IoT, then the “emerging editions” Fedora CoreOS and Fedora Silverblue. You’ll have to scroll to the part without nice icons to reach Fedora Spins (which aren’t all that bad), Fedora Labs (which can be tremendously useful for specific usages), and Alt Downloads (not that useful). But if you go to Workstation, it won’t even tell you that this is a GNOME-only edition, and one should probably read the Fedora 34 Release Announcement to find out about the spins & stuff. Well, this might look a bit confusing to some. Either way, the funny thing is that not all the Lab images are using GNOME!

As I recently said, it’s time for me to rediscover Fedora. How much could a Luddite fall in love with it again? The last time I really liked it, Max Spevack was its leader, and this means 2006-2008.

LATE EDIT: The “primitive” isolation and containerization technologies in FreeBSD and NetBSD, compared…

…in FreeBSD from a NetBSD user’s perspective:

Jails, VMs, and chroots

FreeBSD Jails are very nice. NetBSD doesn’t really have a comparable feature, although it does have hardened chroots which are commonly used as sandboxes.

On NetBSD, there is a surprising amount of tooling for working with chroot sandboxes – my favourite is sandboxctl. It is really quite amazing for what it is, with a few commands you have a NetBSD/i386 8.0 shell on a NetBSD/amd64 9.2 host machine. It even automatically handles downloading the operating system. With FreeBSD it seems recommended to use the bsdinstall tool (this is just the normal FreeBSD installer program) to set up jails, which is quite surprising.

It is possible to apply some extra security restrictions to jails, for example, disallowing raw sockets. The restrictions seem quite similar to what NetBSD’s kauth framework was designed to handle, maybe we could build something similar on that (wink, nudge).

For VMs, I haven’t used FreeBSD’s bhyve enough to compare it to NetBSD’s NVMM/HAXM/Xen. NVMM and HAXM use QEMU, so you probably get access to more peripherals and running older operating systems might be easier due to the many decades of emulated hardware baked into QEMU. On the other hand, QEMU is this huge piece of software with documentation scattered all over the web and no man pages.

This is sooo… passé.

Uh, do you really want to use a Raspberry Pi?

Yes, maybe, but I’m not sure what the result will be… 😉

Not now, but in a distant future, retired into a cave 🙂 Raspberry Pi 400 is good value for the money.

Added an official infographic for Ubuntu’s LXD (Linux Container Hypervisor).

Added a reference to rlxos.

With all due respect, why is Rlxos noted but not GUIX Herds or NIXos Nixpkgs? I’m running GUIX baremetal on multiple PCs now… and I hear NIXos (nixos.org) would be even smoother…

KISS is not the only, or highest, principle. I want a computer so I can DO things. It would be simpler to not have a computer, but then I wouldn’t be able to do those things.

Within my computer, I need some complex apps. I wish they weren’t so complex, but they are, sometimes for convenience, sometimes just because other people have designed them in ways I don’t fully like. I don’t fully trust anything inside my system (apps, services, OS) or outside it (networking, ISP, cloud). So I want to isolate the apps from each other and from the OS and from the outside world, to varying degrees, using containers and firewalls and such. Sure, that’s not the simplest possible system. But it is a USEFUL system.

I’ve tried Snaps and Flatpaks, and mostly don’t use them now because of some bugs they have, making some app features fail. I think they’re still maturing. At some point I will get to Qubes and see how well VMs work. I’m using Fedora, so I have SELinux, an inbound firewall and an outbound firewall (OpenSnitch).

Even with containers and isolation, the desktop shell and the OS matter. KDE Plasma with all apps running in containers would differ from GNOME with all apps running in containers. And they would differ from Windows 10 with all apps running in containers. For me, the shell differences don’t matter so much, but the OS does (see later).

I see some container issues (such as not obeying system theming) as a teething issue or bug, not something fundamentally broken in the container systems. Many of my non-container apps also ignore the system theming, especially if they’re written in something cross-platform such as Java or Electron. Even running Qt apps in a GTK desktop leads to theming issues, I think. We have a theming problem, not a container problem.

I’m willing to throw a little money at the performance issue to make it go away. I just bought a new laptop. I could have had one with 4 GB and spinning HDD and low-end CPU for $600 or something. But I’m going to use this laptop for 10+ years, I hope. So I paid twice that to get 16 GB and SSD and fastest CPU. The penalty for containers would have been slightly visible on the low-end machine; it’s invisible on the high-end machine. I tend to launch 6 big apps at startup and use them all day, so startup speed doesn’t matter. Disk space is cheap.

One way in which I do agree that “the desktop doesn’t matter” is that I find I can get my work done in any desktop shell or UI. I didn’t like GNOME so much, prefer Cinnamon or MATE or KDE, but I can use any of them. I’m not as picky as you seem to be; small glitches don’t bother me. Most of my major apps are cross-platform (Firefox, KeePassXC, Thunderbird, VSCode, Tor), so even moving to Windows or Mac wouldn’t change much about apps for me. The desktop OS does matter to me. I prefer desktop Linux because I can see and learn all the code, create new open-source software, more choices for isolation and security and privacy, commonality with cloud/server stuff.

Thanks for all the interesting articles !

I agree with the “16 GB and SSD and fastest CPU” thing for a new system, but have you noticed the ridiculous situation I’ve encountered here?

I have many more posts in the queue, but I need more time and better focusing abilities.

BTW, I believe this guy starts at $600 (+taxes). The 16 GB version with Win10 retails for €899.99 (VAT-included) in Germany.

Back in 2014, Linus Torvalds addressed the pathetic state of packaging for Linux. It’s part of DebConf 14: QA with Linus Torvalds, starting at minute 5:40.

The relevant portion has been extracted here: Linus Torvalds on why desktop Linux sucks.

But no, Snap, Flatpak and AppImage are not the correct approach to fix this situation. See the example with the Sublime Text Flatpak, above.

Qemu documentation: qemu.readthedocs.io.

TC

Why is this being posted here, exactly?!

Even smarter than Fedora Silverblue, there’s now Fedora Kinoite released with Fedora 35!

As announced alongside Fedora 35 Beta,

In my philosophy, this concept is of course anything but efficient, and far from elegant: an immutable base system with a hybrid image/package system (rpm-ostree) and apps as Flatpaks. Whoever uses tons of apps will end up with a very stable, yet humongous OS, requiring an absurdly large amount of storage.

Yes, we’re in the times of cheap storage and cheap RAM, but this is insane! (And anti-ecological, too bad Greta is incredibly stupid, so she basically doesn’t understand what she should actually fight against.) Linux stopped being an elegant OS when it stopped being able to run on the CPUs and the typical RAM values used back in 1998. When I see how today’s distros simply cannot run decently on hardware from 14-15 years ago, this is driving me mad! I was doing much more with a fucking Pentium III and 16 MB of RAM (yeah, megabytes) than with today’s computers that use 7nm CPUs and 16 GB of RAM only because the OS itself and a fucking web browser (no matter which) require such obscene hardware!

Well, this is the future, and it’s happening now. Hallelujah!

This is the best article I have read this year. Thanks for calling it straight and assuming I’m intelligent and not the other way around. While I have much to consider after having read this, I completely understand the problem.

Let’s look at it this way. A software developer’s true purpose, or perfect purpose, is to design a response to someone’s problem at an abstraction level that is higher than the problem. In order to keep developing solutions that incorporate existing solutions, the ultimate abstraction layer has to trend toward greater abstraction, not reduced abstraction. So that is exactly what we see. Eventually, the developer’s world will not even include code at all, merely building blocks. The abstraction layer will build the code. In many ways, we are already there. The frameworks exist to manipulate themselves into solution-esque blobs. The abstraction is such that the lower levels are completely unmanned and uncoordinated by humans.

How many layers does my smartphone have? I don’t really know, and there is no way I could know. The phone is buried and hidden from me: first by the app developer, then by the phone manufacturer, then by the wireless carrier, and by whoever lives in the layers in between. Is my device storage even owned by me? I think it is. But you know what? It really isn’t. What’s inside that 500 MB app? Not only do I not get to know, but I don’t NEED to know. It’s above my paygrade. It may be 495 MB of zeros, but I’ll never know. It’s storage that I have bought and paid for, yet I don’t control it. More of that is on the way, you can bet on it.